Technological stagnation

Why I came around

by Jason Crawford · January 23, 2021 · 9 min read

“We wanted flying cars, instead we got 140 characters,” says Peter Thiel’s Founders Fund, expressing a sort of jaded disappointment with technological progress. (The fact that the 140 characters have become 280, a 100% increase, does not seem to have impressed him.)

Thiel, along with economists such as Tyler Cowen (The Great Stagnation) and Robert Gordon (The Rise and Fall of American Growth), promotes a “stagnation hypothesis”: that there has been a significant slowdown in scientific, technological, and economic progress in recent decades—say, for a round number, since about 1970, or the last ~50 years.

When I first heard the stagnation hypothesis, I was skeptical. The arguments weren’t convincing to me. But as I studied the history of progress (and looked at the numbers), I slowly came around, and now I’m fairly convinced. So convinced, in fact, that I now seem to be more pessimistic about ending stagnation than some of its original proponents.

In this essay I’ll try to capture both why I was originally skeptical, and also why I changed my mind. If you have heard some of the same arguments that I did, and are skeptical for the same reasons, maybe my framing of the issue will help.

Stagnation is relative

To get one misconception out of the way first: “stagnation” does not mean zero progress. No one is claiming that. There wasn’t zero progress even before the Industrial Revolution (or the civilizations of Europe and Asia would have looked no different in 1700 than they did in the days of nomadic hunter-gatherers, tens of thousands of years ago).

Stagnation just means slower progress. And not even slower than that pre-industrial era, but slower than, roughly, the late 1800s to mid-1900s, when growth rates are said to have peaked.

Because of this, we can’t resolve the issue by pointing to isolated advances. The microwave, the air conditioner, the electronic pacemaker, a new cancer drug—these are great, but they don’t disprove stagnation.

Stagnation is relative, and so to evaluate the hypothesis we must find some way to compare magnitudes. This is difficult.

Only 140 characters?

“We wanted flying cars, instead we got a supercomputer in everyone’s pocket and a global communications network to connect everyone on the planet to each other and to the whole of the world’s knowledge, art, philosophy and culture.” When you put it that way, it doesn’t sound so bad.

Indeed, the digital revolution has been absolutely amazing. It’s up there with electricity, the internal combustion engine, or mass manufacturing: one of the great, fundamental, transformative technologies of the industrial age. (Although admittedly it’s hard to see the effect of computers in the productivity statistics, and I don’t know why.)

But we don’t need to downplay the magnitude of the digital revolution to see stagnation; conversely, proving its importance will not defeat the stagnation hypothesis. Again, stagnation is relative, and we must find some way to compare the current period to those that came before.

Argumentum ad living room

Eric Weinstein proposes a test: “Go into a room and subtract off all of the screens. How do you know you’re not in 1973, but for issues of design?”

This too I found unconvincing. It felt like a weak thought experiment that relied too much on intuition, revealing one’s own priors more than anything else. And why should we necessarily expect progress to be visible directly from the home or office? Maybe it is happening in specialized environments that the layman wouldn’t have much intuition about: in the factory, the power plant, the agricultural field, the hospital, the oil rig, the cargo ship, the research lab.

No progress except for all the progress

There’s also that sleight of hand: “subtract the screens”. A starker form of this argument is: “except for computers and the Internet, our economy has been relatively stagnant.” Well, sure: if you carve out all the progress, what remains is stagnation.

Would we even expect progress to be evenly distributed across all domains? Any one technology follows an S-curve: a slow start, followed by rapid expansion, then a leveling off in maturity. It’s not a sign of stagnation that after the world became electrified, electrical power technology wasn’t a high-growth area like it had been in the early 1900s. That’s not how progress works. Instead, we are constantly turning our attention to new frontiers. If that’s the case, you can’t carve out the frontiers and then say, “well, except for the frontiers, we’re stagnating”.

Bit bigotry?

In an interview with Cowen, Thiel says stagnation is “in the world of atoms, not bits”:

I think we’ve had a lot of innovation in computers, information technology, Internet, mobile Internet in the world of bits. Not so much in the world of atoms, supersonic travel, space travel, new forms of energy, new forms of medicine, new medical devices, etc.

But again, why should we expect it to be different? Maybe bits are just the current frontier. And what’s the matter with bits, anyway? Are they less important than atoms? Progress in any field is still progress.

The quantitative case

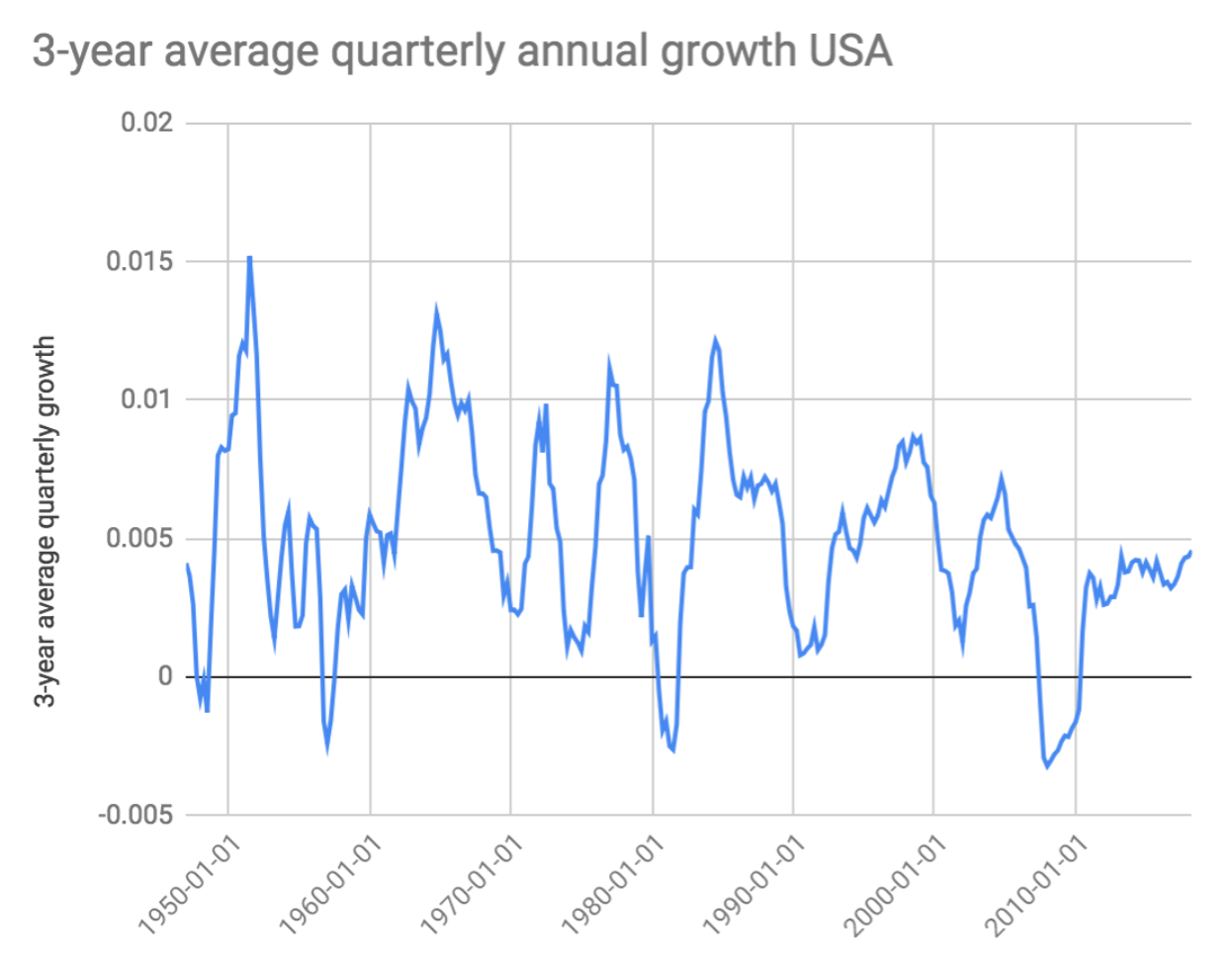

So, we need more than isolated anecdotes, or appeals to intuition. A more rigorous case for stagnation can be made quantitatively. A paper by Cowen and Ben Southwood quotes Gordon: “U.S. economic growth slowed by more than half from 3.2 percent per year during 1970-2006 to only 1.4 percent during 2006-2016.” Or look at this chart from the same paper:

Gordon’s own book points out that growth in output per hour has slowed from an average annual rate of 2.82% in the period 1920-1970, to 1.62% in 1970-2014. He also analyzes TFP (total factor productivity, a residual calculated by subtracting out increases in capital and labor from GDP growth; what remains is assumed to represent productivity growth from technology). Annual TFP growth was 1.89% from 1920-1970, but less than 1% in every decade since. (More detail in my review of Gordon’s book.)
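To get a feel for what a gap between 2.82% and 1.62% means once it compounds, here is a minimal sketch. The two rates are the output-per-hour figures quoted from Gordon above; the function names and the 50-year horizon are my own illustrative assumptions, and holding each rate constant for the whole horizon is a simplification rather than anything Gordon computes.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


def cumulative_multiple(annual_rate: float, years: int) -> float:
    """Factor by which a quantity grows after compounding for `years` years."""
    return (1 + annual_rate) ** years


# Average annual growth in output per hour, as quoted from Gordon's book.
RATES = {"1920-1970": 0.0282, "1970-2014": 0.0162}

# Hypothetical common horizon, chosen only to make the two rates comparable.
HORIZON_YEARS = 50

for period, rate in RATES.items():
    print(
        f"{period}: doubles in {doubling_time(rate):.1f} years, "
        f"grows {cumulative_multiple(rate, HORIZON_YEARS):.2f}x "
        f"over {HORIZON_YEARS} years"
    )
```

Under these assumptions, output per hour doubles in roughly 25 years at the earlier rate and roughly 43 years at the later one. Over a 50-year span that is about a fourfold increase versus a bit more than a doubling, which is why a difference of about one percentage point matters so much.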

Analyzing growth quantitatively is hard, and these conclusions are disputed. GDP is problematic (and these authors acknowledge this). In particular, it does not capture consumer surplus: since you don’t pay for articles on Wikipedia, searches on Google, or entertainment on YouTube, a shift to these services away from paid ones actually shrinks GDP, but it represents progress and consumer benefit.

Gordon, however, points out that GDP has never captured consumer surplus, and there has been plenty of surplus in the past. So if you want to argue that unmeasured surplus is the cause of an apparent (but not a real) decline in growth rates, then you have to argue that GDP has become systematically more mismeasured over time.

So far, I’ve heard only one argument that even hints in this direction: the shift from manufacturing to services. If services are more mismeasured than manufactured products, then in logic at least this could account for an illusory slowdown. But I’ve never seen this argument fully developed.

In any case, the quantitative argument did not convince me of the stagnation hypothesis nearly as much as the qualitative one did.

Sustaining multiple fronts

I remember the first time I thought there might really be something to the stagnation hypothesis: it was when I started mapping out a timeline of major inventions in each main area of industry.

At a high level, I think of technology/industry in six major categories:

- Manufacturing & construction

- Agriculture

- Energy

- Transportation

- Information

- Medicine

Almost every significant advance or technology can be classified in one of these buckets (with a few exceptions, such as finance and perhaps retail).

The first phase of the industrial era, sometimes called “the first Industrial Revolution”, from the 1700s through the mid-1800s, consisted mainly of two fundamental advances: mechanization, and the steam engine. The factory system evolved in part out of the former, and the locomotive was based on the latter. Together, these revolutionized manufacturing, energy, and transportation, and began to transform agriculture as well.

The “second Industrial Revolution”, from the mid-1800s to the mid-1900s, is characterized by a greater influence of science: mainly chemistry, electromagnetism, and microbiology. Applied chemistry gave us better materials, from Bessemer steel to plastic, and synthetic fertilizers and pesticides. It also gave us processes to refine petroleum, enabling the oil boom; this led to the internal combustion engine, and the vehicles based on it—cars, planes, and oil-burning ships—that still dominate transportation today. Physics gave us the electrical industry, including generators, motors, and the light bulb; and electronic communications, from the telegraph and telephone through radio and television. And biology gave us the germ theory, which dramatically reduced infectious disease mortality rates through improvements in sanitation, new vaccines, and towards the end of this period, antibiotics. So every single one of the six major categories was completely transformed.

Since then, the “third Industrial Revolution”, starting in the mid-1900s, has mostly seen fundamental advances in a single area: electronic computing and communications. If you date it from 1970, there has really been nothing comparable in manufacturing, agriculture, energy, transportation, or medicine—again, not that these areas have seen zero progress, simply that they’ve seen less-than-revolutionary progress. Computers have completely transformed all of information processing and communications, while there have been no new types of materials, vehicles, fuels, engines, etc. The closest candidates I can see are containerization in shipping, which revolutionized cargo but did nothing for passenger travel; and genetic engineering, which has given us a few big wins but hasn’t reached nearly its full potential yet.

The digital revolution has had echoes, derivative effects, in the other areas, of course: computers now help to control machines in all of those areas, and to plan and optimize processes. But those secondary effects existed in previous eras, too, along with primary effects. In the third Industrial Revolution we only have primary effects in one area.

So, making a very rough count of revolutionary technologies, there were:

- 3 in IR1: mechanization, steam power, the locomotive

- 5 in IR2: oil + internal combustion, electric power, electronic communications, industrial chemistry, germ theory

- 1 in IR3 (so far): computing + digital communications

It’s not that bits don’t matter, or that the computer revolution isn’t transformative. It’s that in previous eras we saw breakthroughs across the board. It’s that we went from five simultaneous technology revolutions to one.

The missing revolutions

The picture becomes starker when we look at the technologies that were promised, but never arrived or haven’t come to fruition yet; or those that were launched, but aborted or stunted. If manufacturing, agriculture, etc. weren’t transformed, then how could they have been?

Energy: The most obvious stunted technology is nuclear power. In the 1950s, everyone expected a nuclear future. Today, nuclear supplies less than 20% of US electricity and only about 8% of its total energy (and about half those figures in the world at large). Arguably, we should have had nuclear homes, cars and batteries by now.

Transportation: In 1969, Apollo 11 landed on the Moon and Concorde took its first supersonic test flight. But they were not followed by a thriving space transportation industry or broadly available supersonic passenger travel. The last Apollo mission flew in 1972, a mere three years later. Concorde was only ever available as a luxury for the elite, was never highly profitable, and was shut down in 2003, after less than thirty years in service. Meanwhile, passenger travel speeds are unchanged over 50 years (actually slightly reduced). And of course, flying cars are still the stuff of science fiction. Self-driving cars may be just around the corner, but haven’t arrived yet.

Medicine: Cancer and heart disease are still the top causes of death. Solving even one of these, the way we have mostly solved infectious disease and vitamin deficiencies, would have counted as a major breakthrough. Genetic engineering, again, has shown a few excellent early results, but hasn’t yet transformed medicine.

Manufacturing: In materials, carbon nanotubes and other nanomaterials are still mostly a research project, and we still have no material to build a space elevator or a space pier. As for processes, atomically precise manufacturing is even more science-fiction than flying cars.

If we had gotten even a few of the above, the last 50 years would seem a lot less stagnant.

One to zero

This year, the computer turns 75 years old, and the microprocessor turns 50. Digital technology is due to level off in its maturity phase.

So what comes next? The only thing worse than going from five simultaneous technological revolutions to one, is going from one to zero.

I am hopeful for genetic engineering. The ability to fully understand and control biology obviously has enormous potential. With it, we could cure disease, extend human lifespan, and augment strength and intelligence. We’ve made a good start with recombinant DNA technology, which gave us synthetic biologics such as insulin, and CRISPR is a major advance on top of that. The rapid success of two different mRNA-based covid vaccines is also a breakthrough, and a sign that a real genetic revolution might be just around the corner.

But genetic engineering is also subject to many of the forces of stagnation: research funding via a centralized bureaucracy, a hyper-cautious regulatory environment, and a cultural perception of something scary and dangerous. So it is not guaranteed to arrive. Without the right support and protection, we might be looking back on biotech from the year 2070 the way we look back on nuclear energy now, wondering why we never got a genetic cure for cancer and why life expectancy has plateaued.

Aiming higher

None of this is to downplay the importance or impact of any specific innovation, nor to discourage any inventor, present or future. Quite the opposite! It is to encourage us to set our sights still higher.

Now that I understand what was possible around the turn of the last century, I can’t settle for anything less. We need breadth in progress, as well as depth. We need revolutions on all fronts at once: not only biotech but manufacturing, energy and transportation as well. We need progress in bits, atoms, cells, and joules.

Social media link image credit: Founders Fund